Deep Statistical Model Checking is a new technique to get insights in the quality of a policy induced by a neural network trained on a specific task which is used as a scheduler later to determinize the MDP model of a similar task.

In the original work we considered the simple Racetrack use case in which the neural network has been trained on the task of reaching the goal with a probability as high as possible while crashing as rarely as possible. For the resulting stochastic process, we harvest state-of-the-art statistical model checking techniques of The Modest Toolset to study the detailed behavior of the net. More concretely, we treat the NN as a black box to resolve the nondeterminism in the given MDP of the model. The NN gets a description of the current state and returns the action to apply next. The statistical analysis of the resulting Markov chain and thereby of the NN properties gives insights in the quality of the NN, not only for the whole task but also for specific regions of the Racetrack.

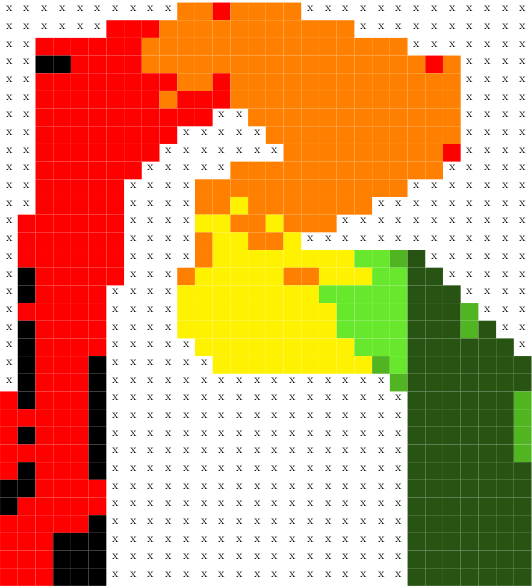

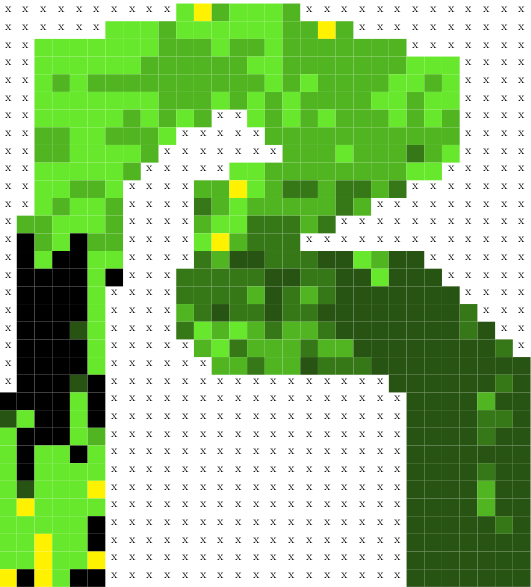

An impression of this quality analysis is given in the figure below, where a simple heat map visualizes the chance to safely reach the goal if starting in each of the cells along the track. On the left, the goal probabilities are smaller, which indicated that the net could be retrained to achieve better results.

DSMC enables the inspection of risky driving behavior induced by the NN. Such information can be used to retrain the net in a certain area of the map to improve quality or to see if the net prefers a specific route over an equivalent one. In a nutshell, DSMC provides a scalable verification method of NNs.

The whole artifact of the DSMC work is available online. In this artifact you can find a document with additional explanations about the modelling details, the executable of The Modest Toolset with the Oracle option to resolve nondeterminism in modes used in the paper, the scripts used for learning as well as the weights and biases of the final NNs and the logfiles for the experiments presented in the paper.

The video of the FORTE 2020 conference talk, where we presented DSMC:

It is also available here.

In DSMC Evaluation Stages: Fostering Robust and Safe Behavior in Deep Reinforcement Learning we demonstrated that DSMC can be applied during deep reinforcement learning to determine state space regions with weak performance to concentrate on them during the learning process.

Deep Reinforcement Learning (DRL) technology is not without its failures, especially in safety-critical applications: (i) the training objective maximizes average rewards, which may disregard rare but critical situations and hence lack local robustness; (ii) optimization objectives targeting safety typically yield degenerated reward structures which for DRL to work must be replaced with proxy objectives. Here we introduce methodology that can help to address both deficiencies. We incorporate evaluation stages (ES) into DRL, leveraging deep statistical model checking (DSMC) which verifies NN policies in MDPs. Our ES apply DSMC at regular intervals to determine state space regions with weak performance. We adapt the subsequent DRL training priorities based on the outcome, (i) focusing DRL on critical situations, and (ii) allowing to foster arbitrary objectives. Our results show that DSMC-based ES can significantly improve both (i) and (ii).